A method for estimating 6D poses of textureless objects in manufacturing sites using an image-based, data-driven technique

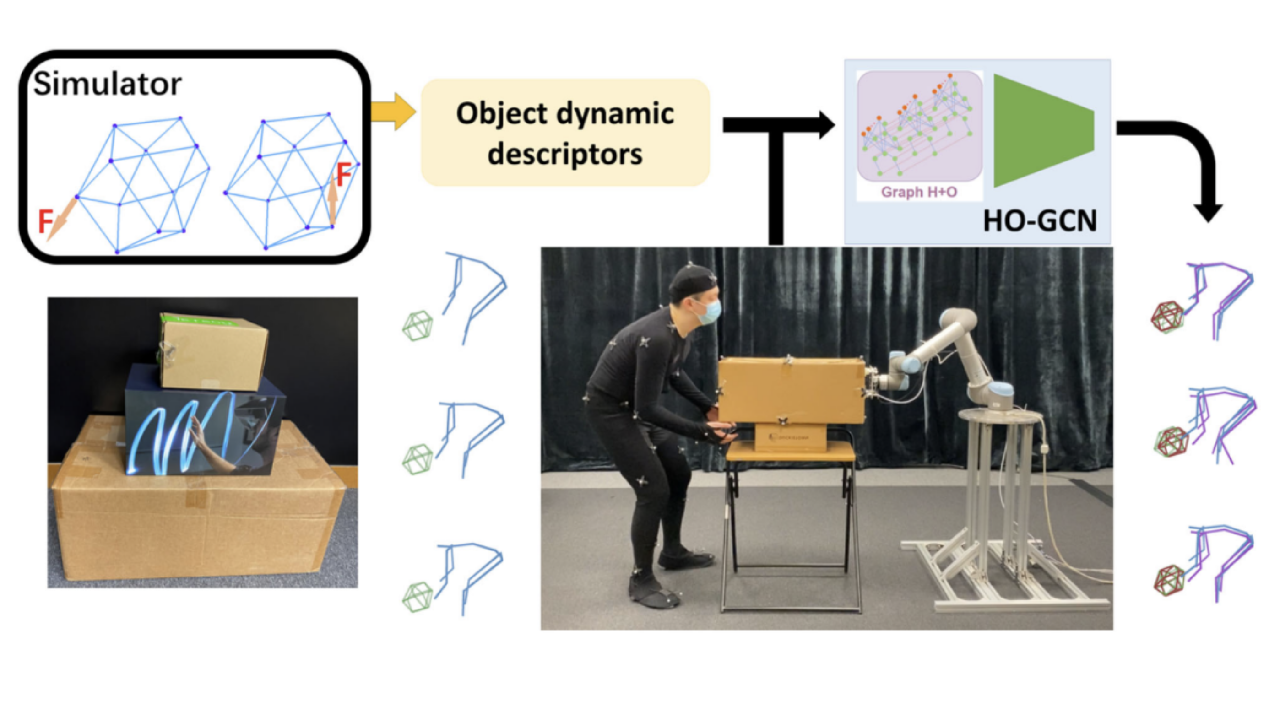

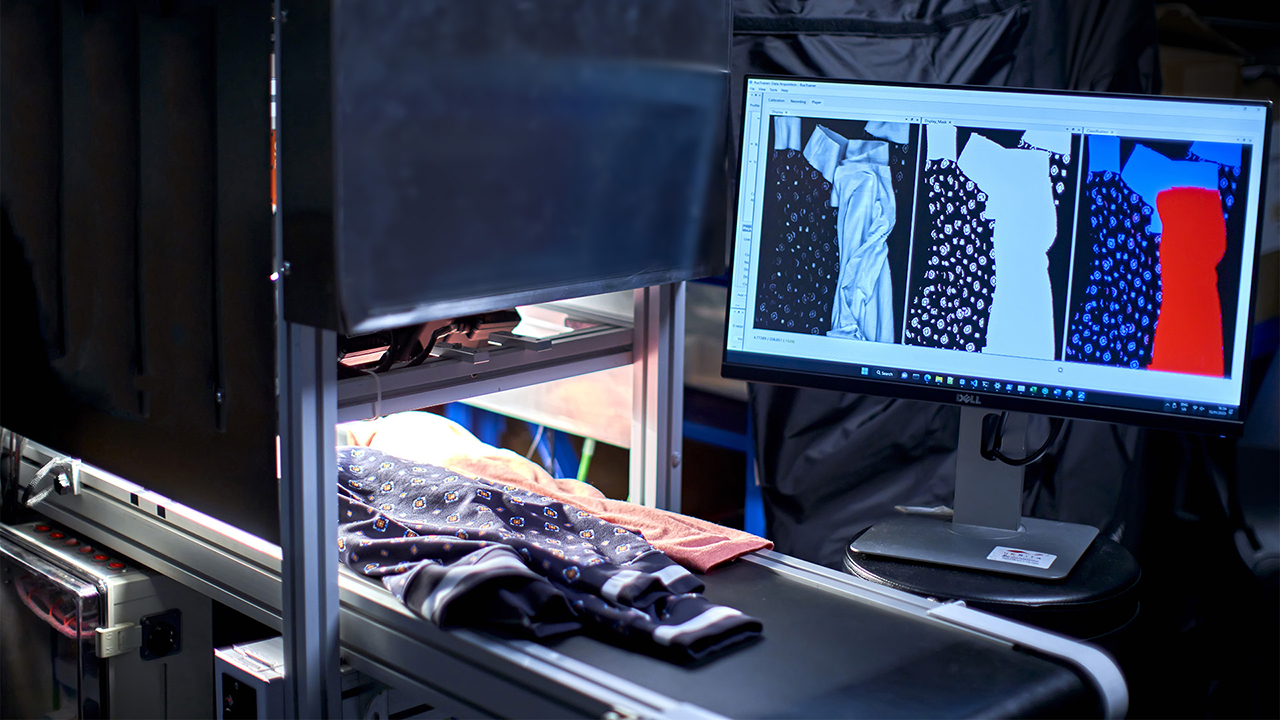

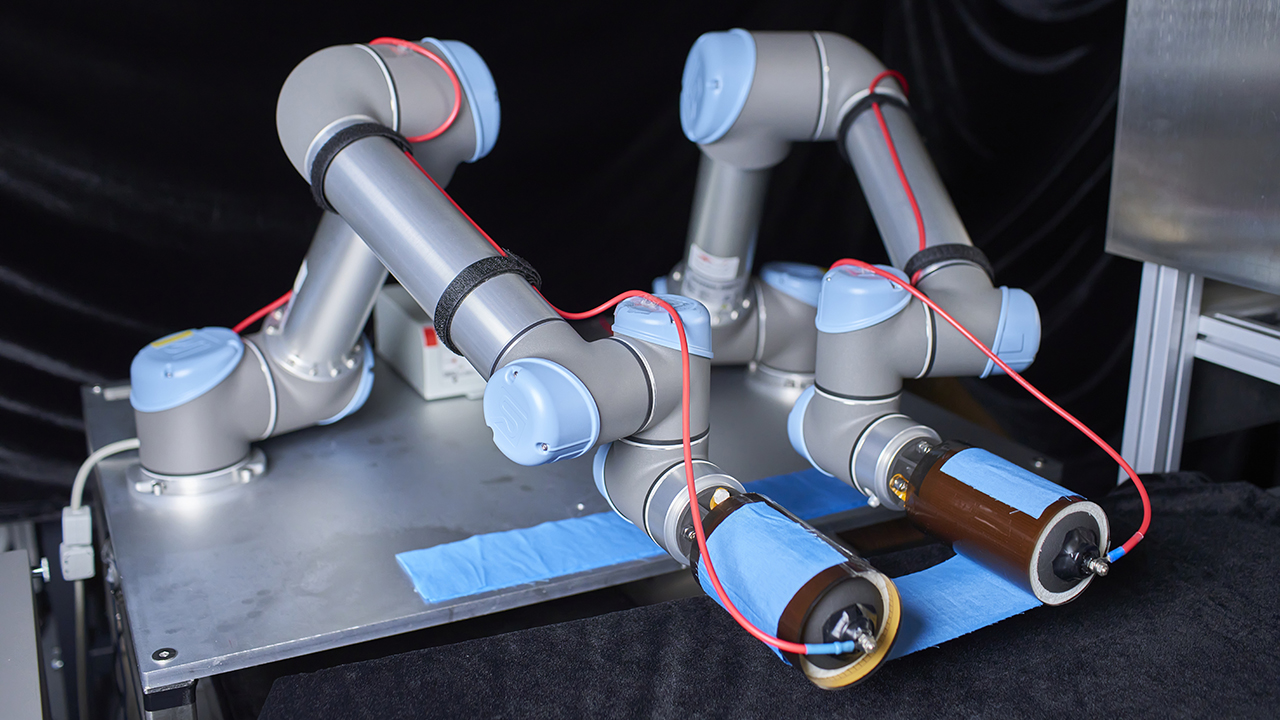

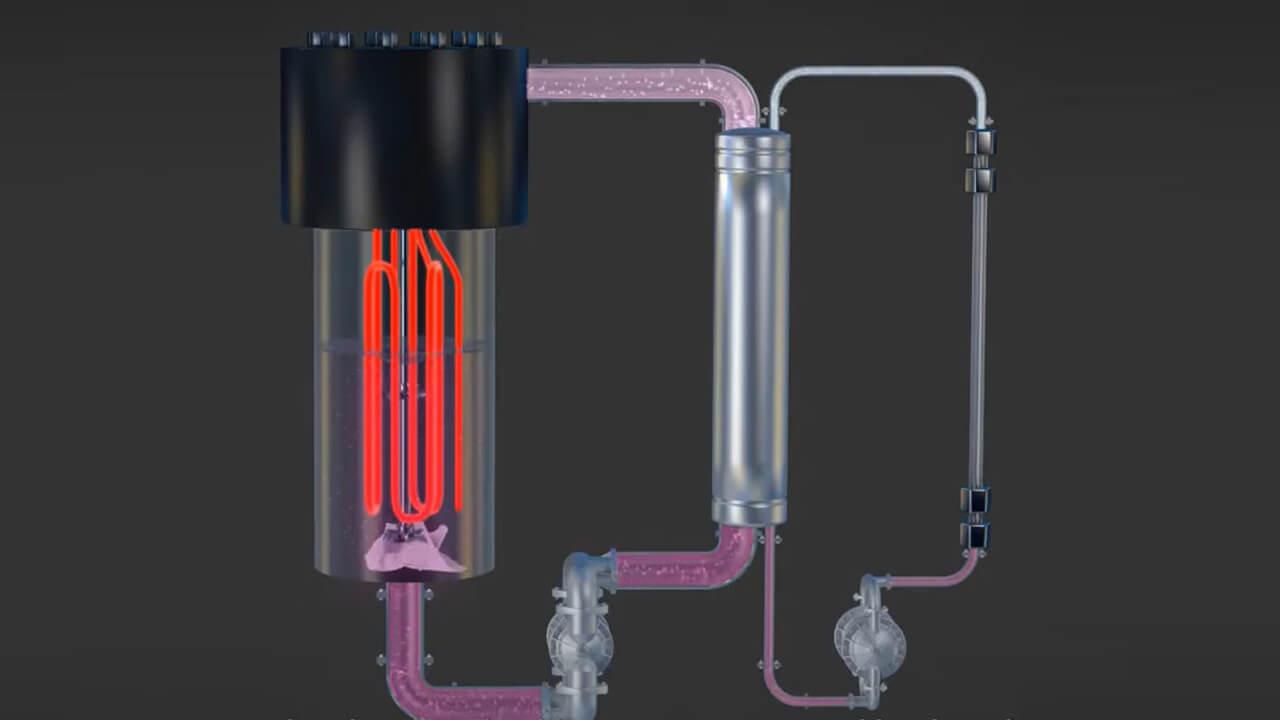

This method takes an RGB image of a scene as input and estimates the 6D pose (3D location and 3D orientation) of a target object in the image. The technology applies to any scenario that requires the 6D pose of a rigid target object.
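
To make the term "6D pose" concrete, the following is a minimal sketch of how a location and an orientation are commonly packed into one rigid transform. The helper names (`pose_matrix`, `rot_z`, `transform_points`) and the example values are assumptions chosen for illustration; they are not taken from the method described here.

```python
import numpy as np

def rot_z(angle_rad):
    """Rotation matrix for a rotation of angle_rad about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def pose_matrix(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector translation into a 4x4 rigid transform."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def transform_points(T, points):
    """Map Nx3 object-frame points into the camera frame using pose T."""
    return points @ T[:3, :3].T + T[:3, 3]

# Hypothetical pose: object rotated 90 degrees about z, 0.5 m in front of the camera.
T = pose_matrix(rot_z(np.pi / 2), np.array([0.0, 0.0, 0.5]))

# A single object-frame point (for example, one corner of the part).
corner = np.array([[0.1, 0.0, 0.0]])
corner_cam = transform_points(T, corner)
```

Three of the six degrees of freedom live in the translation vector and the other three in the rotation, which is why a pose estimator has to recover both from the image.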

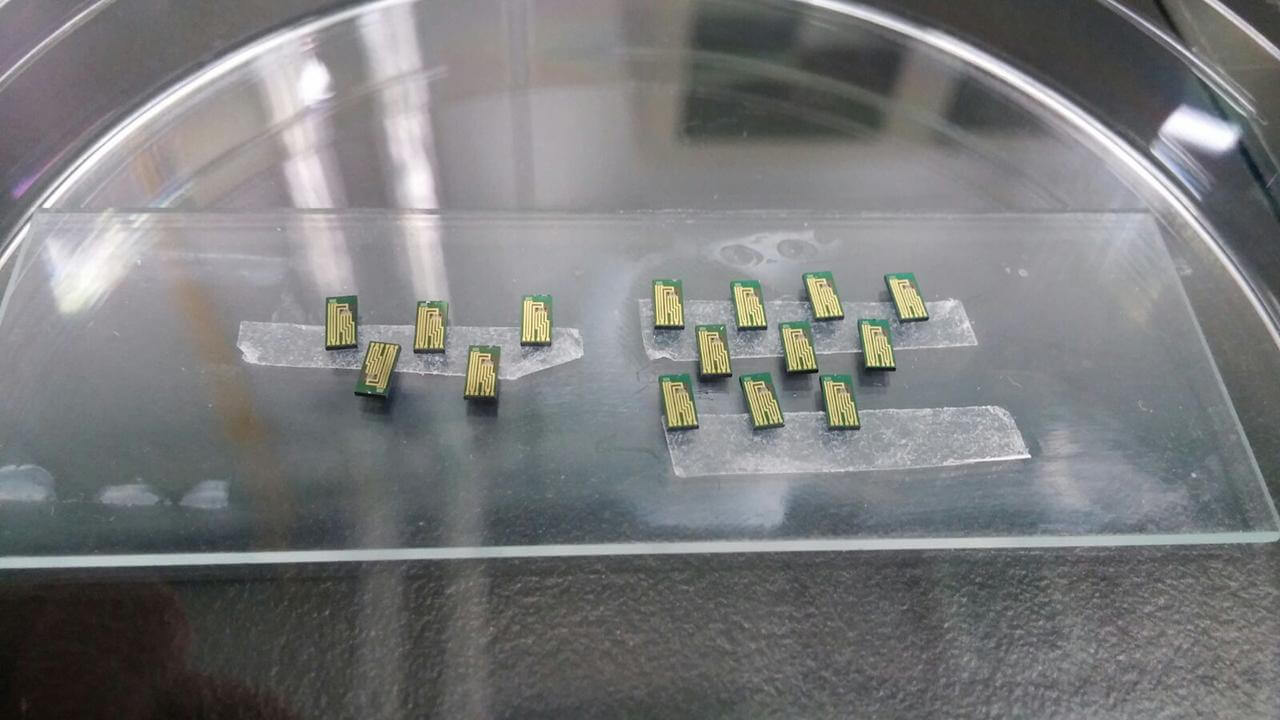

6D pose estimation of rigid objects is key to enabling human-robot collaboration in automated industrial processes. Estimating the 6D poses of rigid objects from RGB images is challenging, especially for textureless objects with strong symmetry: they offer only sparse visual features for the task, and their symmetry leads to pose ambiguity.
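
The pose ambiguity caused by symmetry can be illustrated with a small sketch. It assumes a hypothetical square plate with four-fold symmetry about the z axis; the helper `same_point_set` is an illustrative name, not part of the method described here. Two different rotations produce the same set of points, so no image of the untextured plate could tell them apart.

```python
import numpy as np

def rot_z(angle_rad):
    """Rotation matrix for a rotation of angle_rad about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def same_point_set(a, b, tol=1e-9):
    """True if every point of a has a match in b and vice versa."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return bool((d.min(axis=1) < tol).all() and (d.min(axis=0) < tol).all())

# Vertices of a hypothetical square plate, four-fold symmetric about z.
square = np.array([[1, 1, 0], [-1, 1, 0], [-1, -1, 0], [1, -1, 0]], dtype=float)

reference = square @ rot_z(0.0).T

# A 90 degree rotation maps the plate onto itself: two poses, one appearance.
ambiguous = same_point_set(reference, square @ rot_z(np.pi / 2).T)

# A 30 degree rotation does not, so this pose is distinguishable.
distinct = not same_point_set(reference, square @ rot_z(np.pi / 6).T)
```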

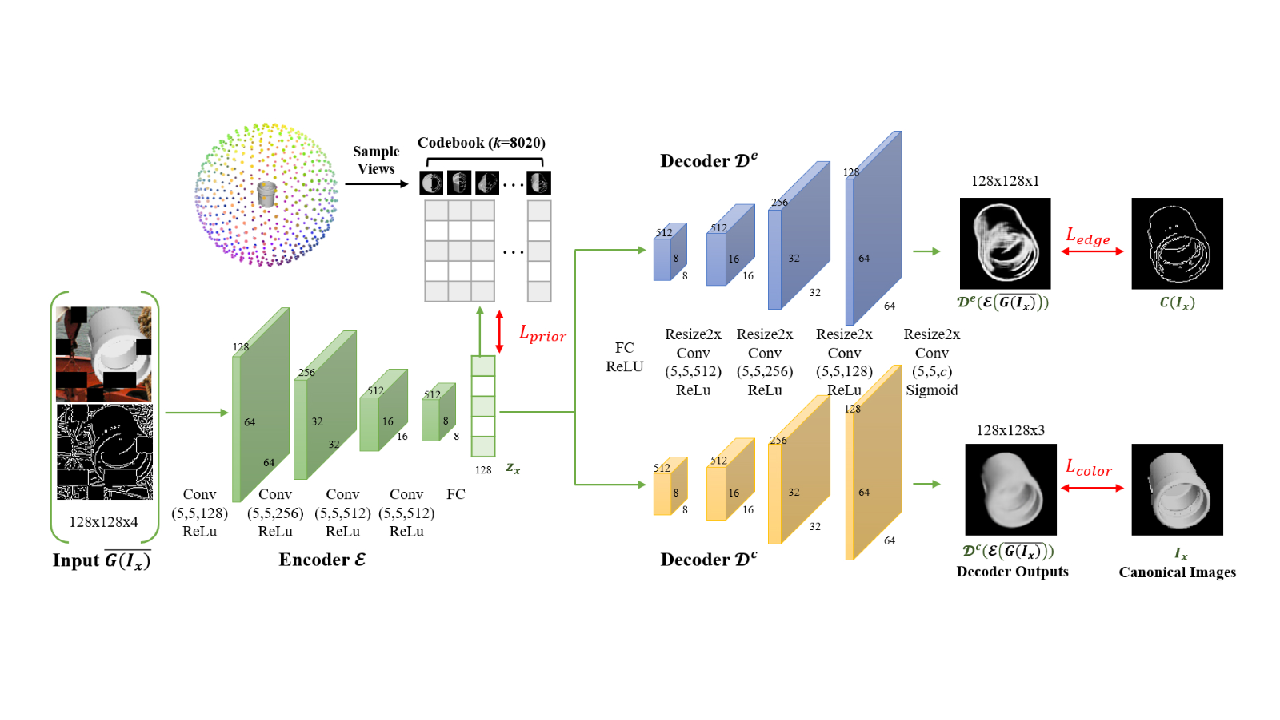

- Edge cues complement the color images with more discriminative features and reduce the domain gap between the real images used for testing and the synthetic images used for training.

- Data-driven approaches require a large amount of human-labeled data for training. We propose a self-supervision scheme (which uses no labeled data) to enforce a geometric prior in the learned pose representations. This prior encourages the learned representations of two RGB images showing nearby rotations to be similar to each other, which makes the estimated poses more reliable and robust.

- Detecting rigid objects and estimating their 6D poses from images is fundamental in robotics and computer vision and critical for applications like robotic grasping and augmented reality.
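
The two ideas in the points above, edge cues as an extra input and a geometric prior on nearby rotations, can be sketched roughly as follows. This is an illustrative sketch only, not the authors' formulation: the gradient-magnitude edge map, the 15 degree threshold, the squared-distance penalty, and all function names are assumptions. It also assumes the relative rotation of an image pair is available for free, for example because both views were rendered synthetically.

```python
import numpy as np

def rot_z(angle_rad):
    """Rotation matrix for a rotation of angle_rad about the z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def edge_channel(rgb):
    """Gradient-magnitude edge map of an HxWx3 float image (a simple stand-in edge detector)."""
    gray = rgb.mean(axis=2)
    gy, gx = np.gradient(gray)  # derivatives along rows, then columns
    return np.hypot(gx, gy)

def with_edges(rgb):
    """Stack the edge map onto the color image, giving an HxWx4 input."""
    return np.dstack([rgb, edge_channel(rgb)])

def rotation_angle(R1, R2):
    """Geodesic angle in radians between two rotation matrices."""
    cos = (np.trace(R1.T @ R2) - 1.0) / 2.0
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

def nearby_rotation_loss(z1, z2, R1, R2, max_angle=np.deg2rad(15)):
    """Pull two embeddings together only when their rotations are close.

    z1, z2 are the (hypothetical) learned representations of two images.
    """
    if rotation_angle(R1, R2) > max_angle:
        return 0.0
    return float(np.sum((z1 - z2) ** 2))

# Toy image: dark left half, bright right half, so there is one vertical edge.
image = np.zeros((4, 6, 3))
image[:, 3:, :] = 1.0
stacked = with_edges(image)

# Toy embeddings for a nearby pair (10 degrees apart) and a distant pair (90 degrees).
z_a, z_b = np.array([1.0, 0.0]), np.array([0.5, 0.0])
near = nearby_rotation_loss(z_a, z_b, rot_z(0.0), rot_z(np.deg2rad(10)))
far = nearby_rotation_loss(z_a, z_b, rot_z(0.0), rot_z(np.pi / 2))
```

An edge map depends on object outlines rather than surface appearance, which is one plausible reason it transfers between synthetic and real images better than raw color does.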

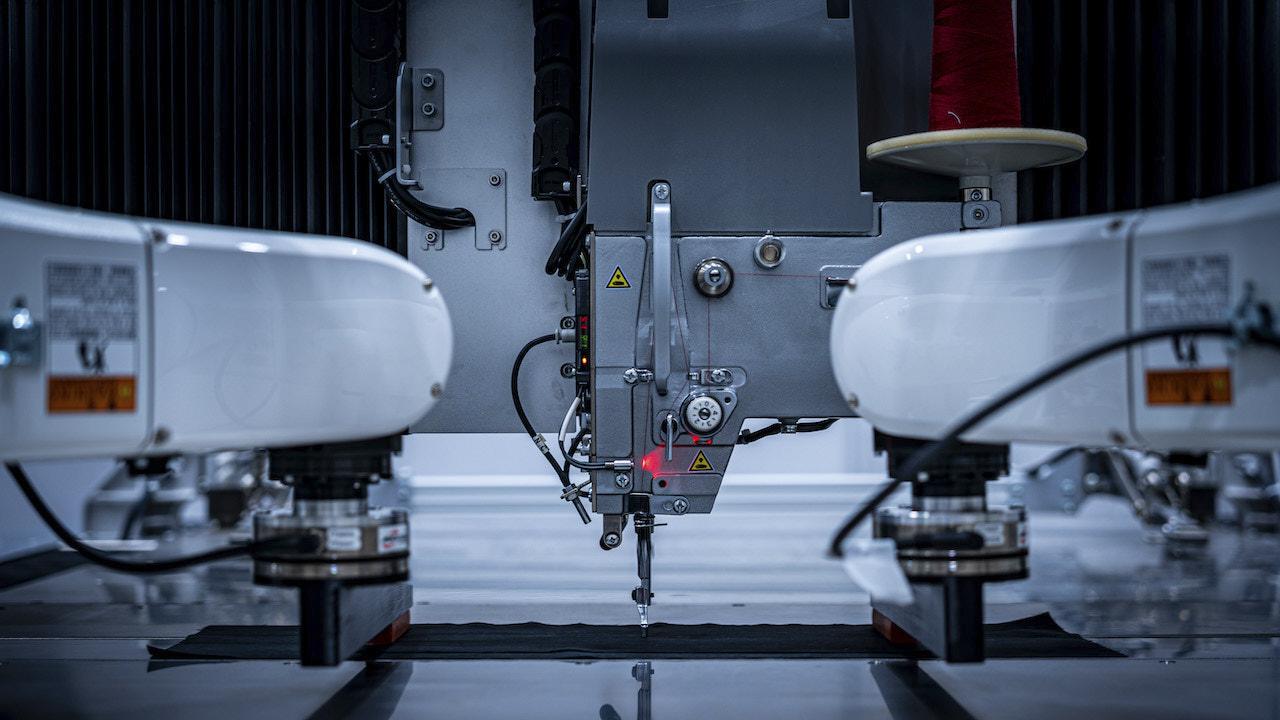

- This technology enhances safety and productivity by facilitating human-robot collaboration in industrial environments.

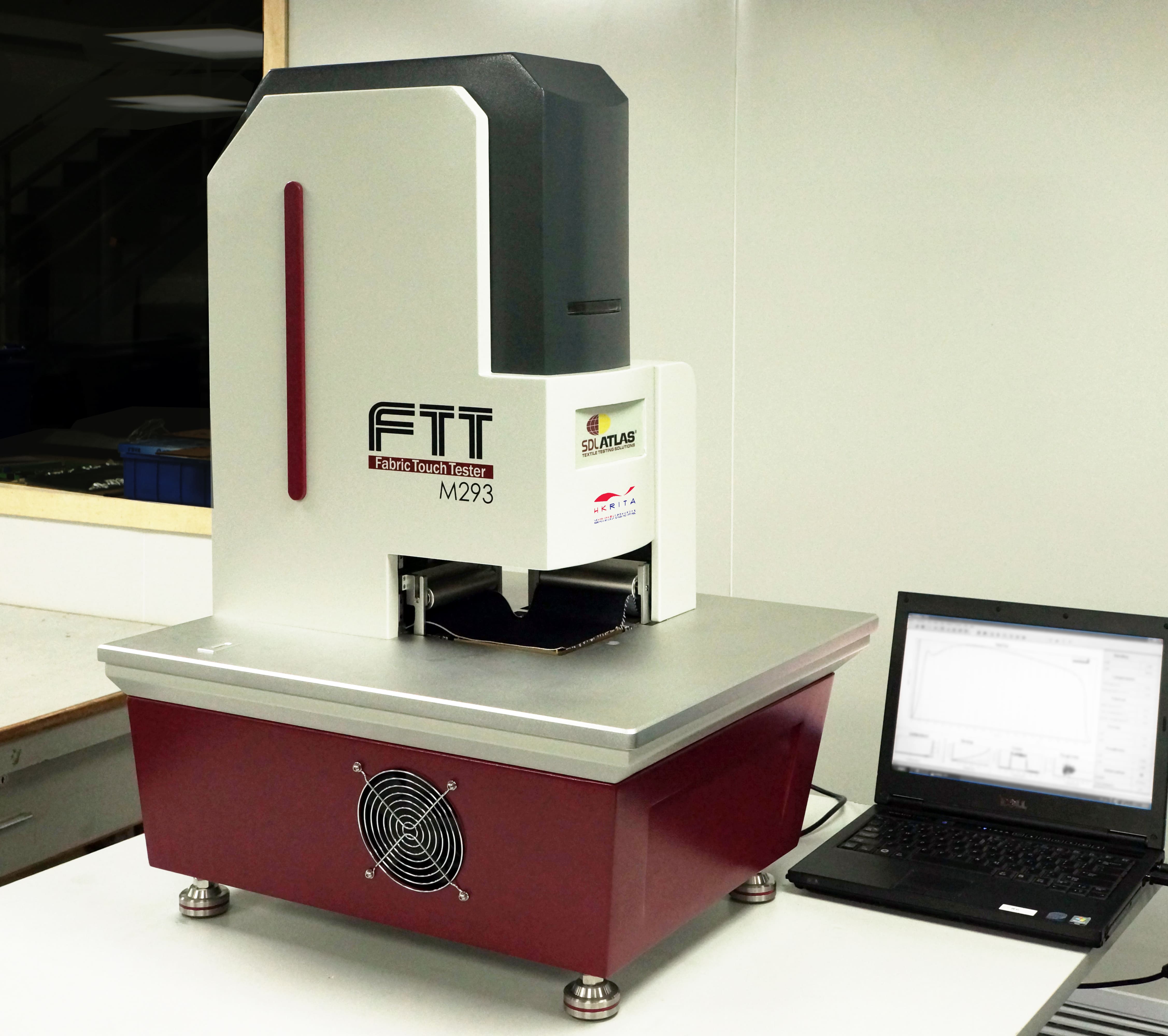

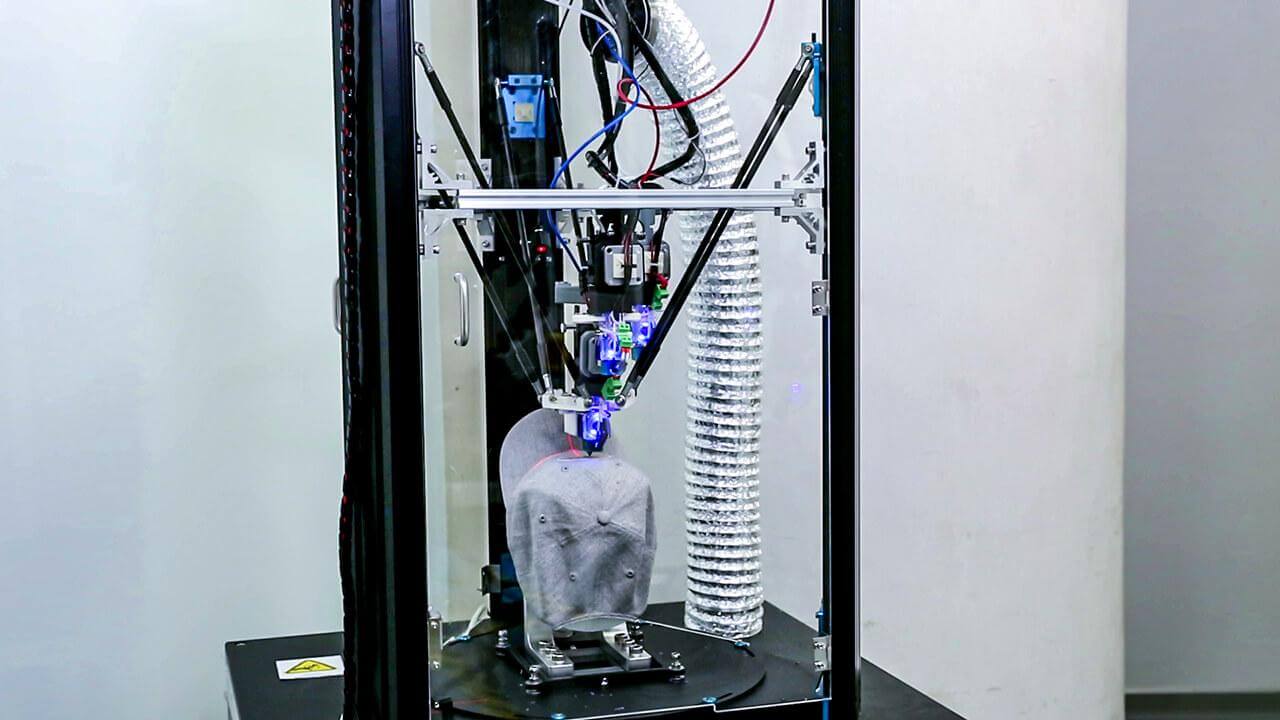

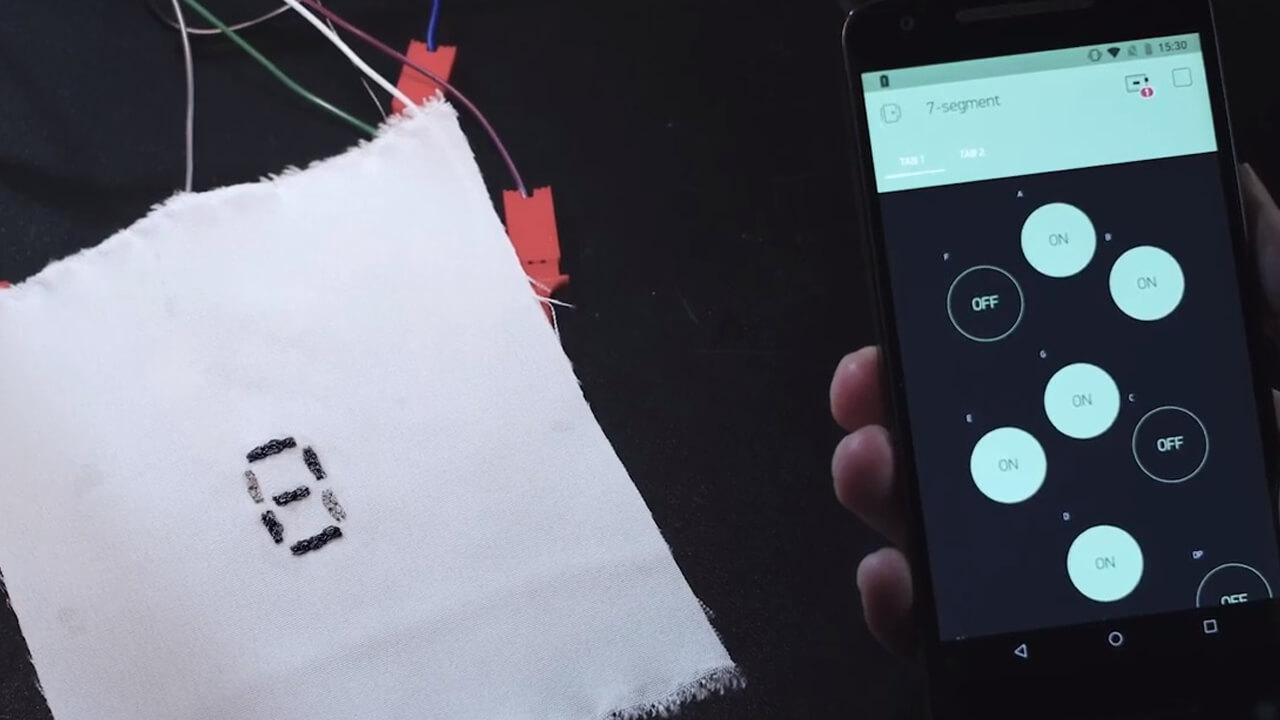

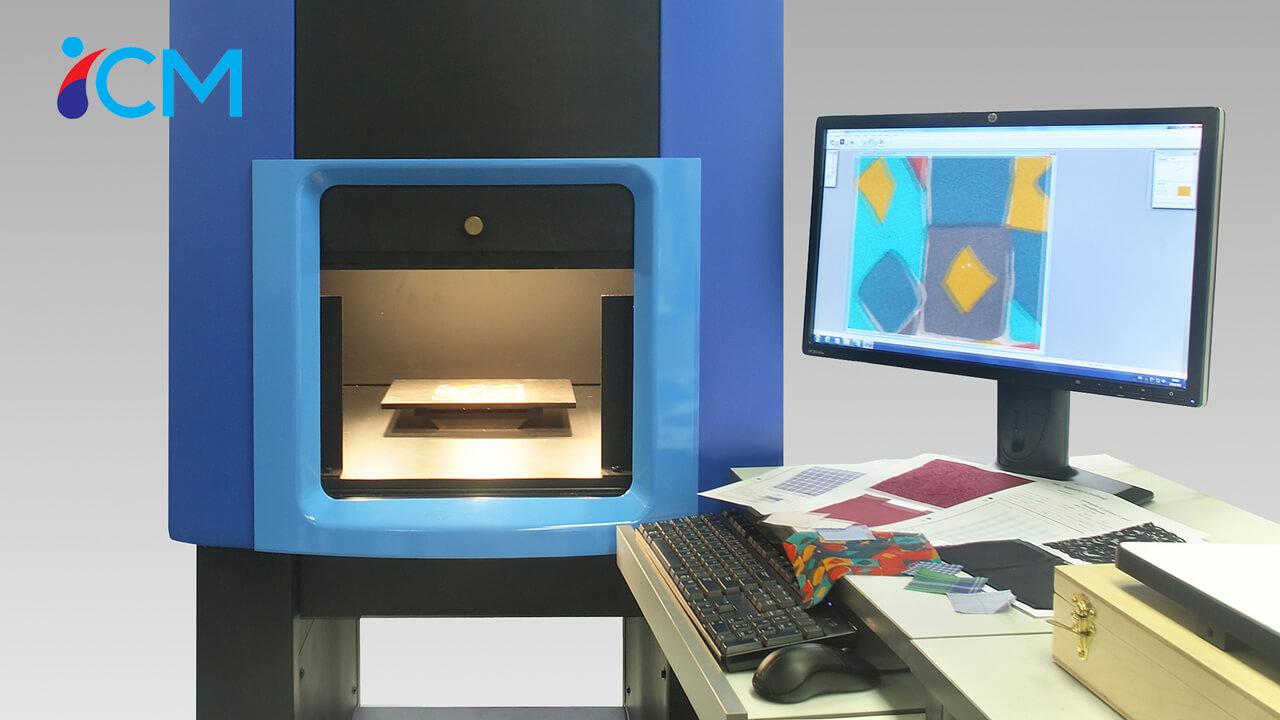

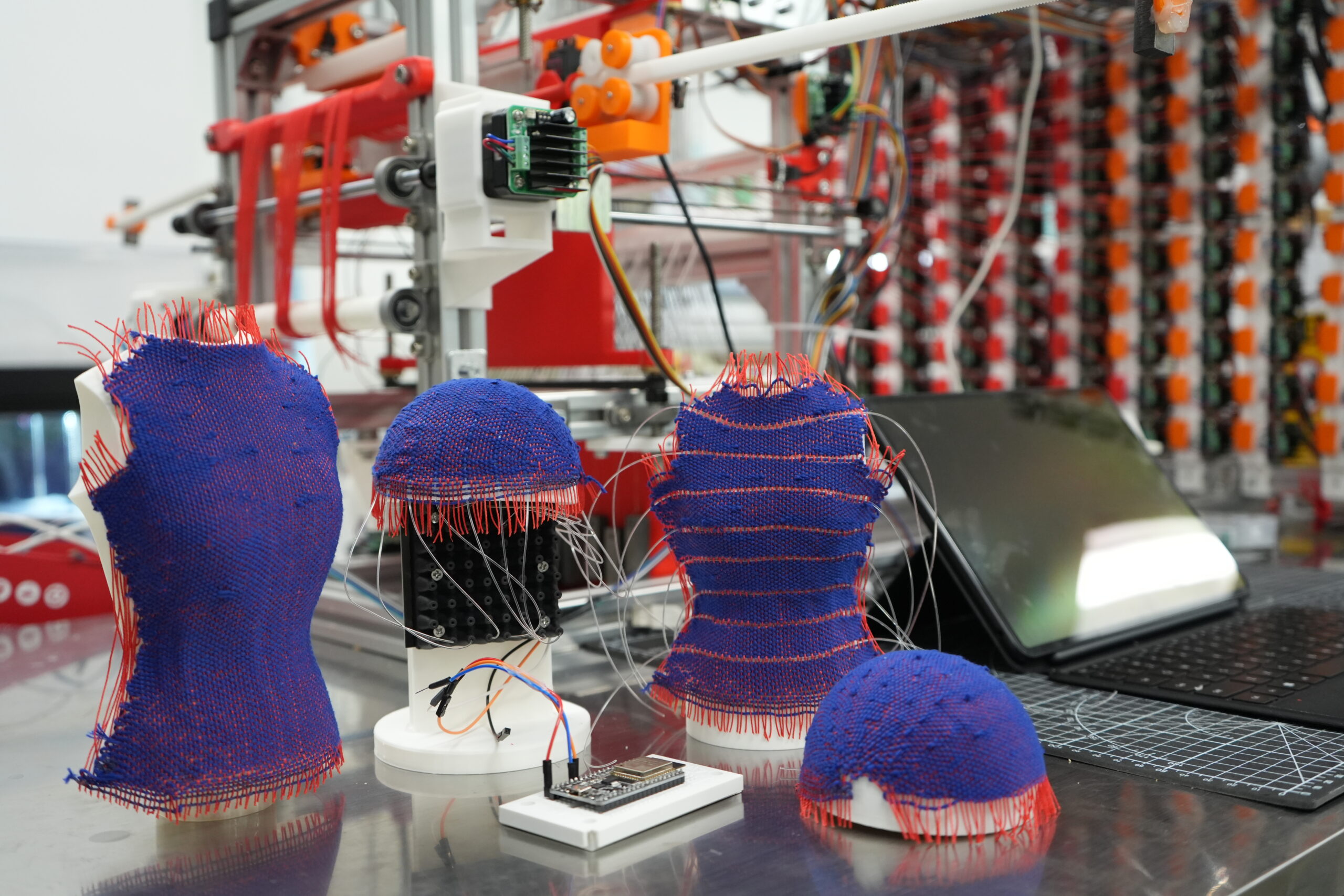

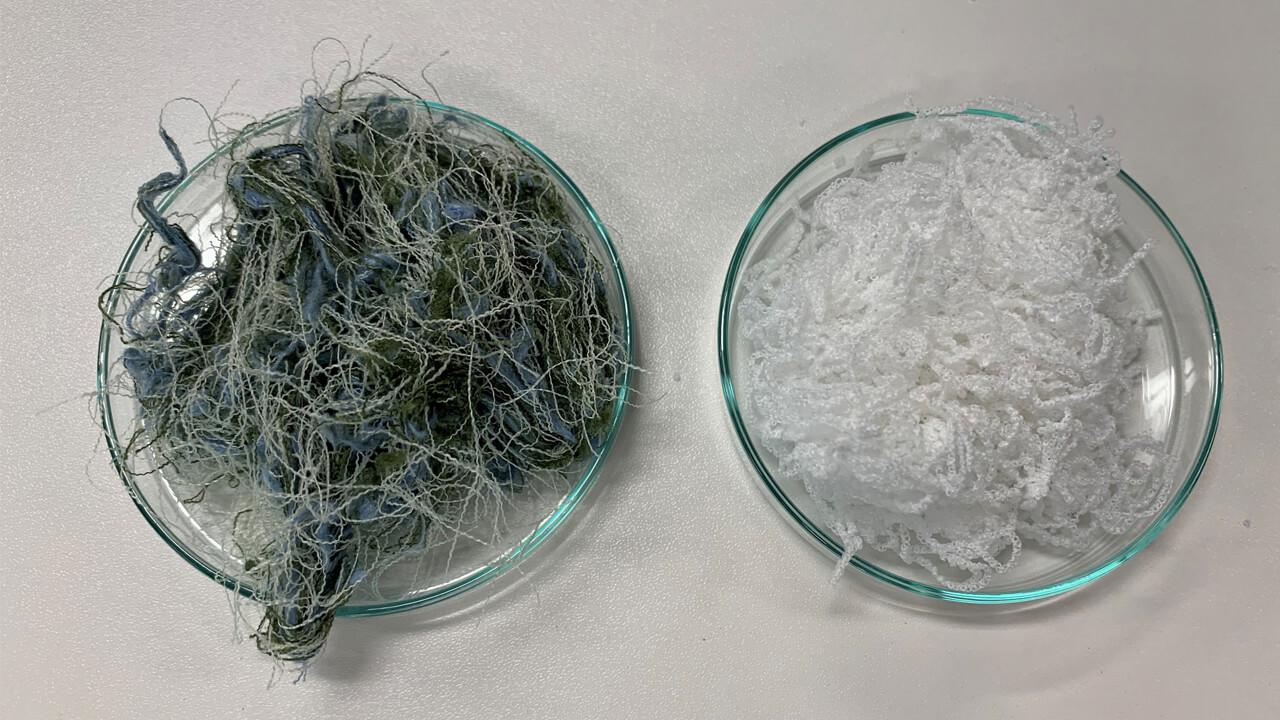

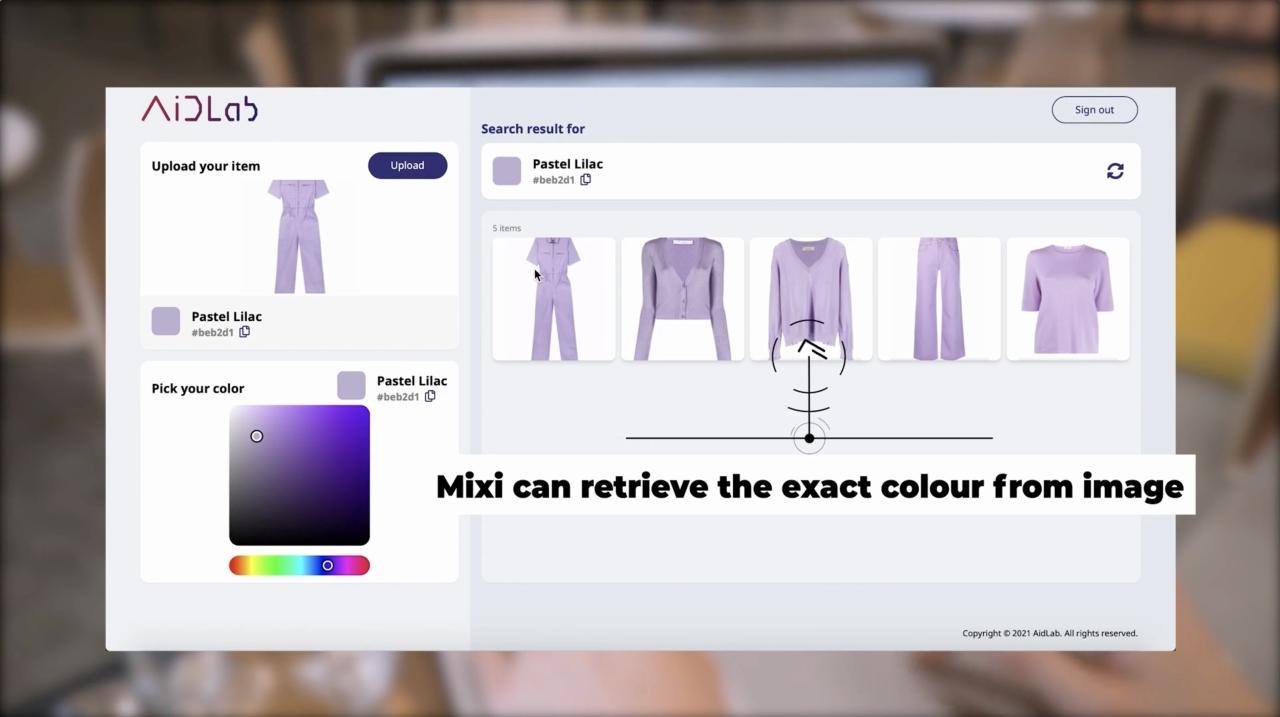

The Centre for Transformative Garment Production (TransGP) was established through the collaborative effort of The University of Hong Kong and Tohoku University. The Centre aims to provide solutions for the needs of future society, in which labour shortages will arise from population aging and an increasing percentage of people will live in megacities. The Centre also aims to drive a paradigm shift in reindustrializing selected sectors, namely the garment industry, which still relies on labour-intensive operations but has clear, identified processes for transformation. The Centre's research programmes are expected to achieve a number of goals, such as leveraging proprietary AI and robotics technology to shorten development cycles, improve engineering efficiency, prevent faults, and increase safety by automating risky activities.